Smarter Air Interfaces: AI/ML in 5G and 6G RAN

Artificial Intelligence and Machine Learning (AI/ML) are playing an increasingly vital role in the 5G New Radio (NR) air interface, and will be even more integral to 6G, because of the rising complexity, dynamic environments, and stringent performance requirements of modern wireless networks. As networks become more intricate, traditional rule-based algorithms often struggle to adapt in real time. In contrast, AI/ML can learn from patterns and dynamically optimize performance. Notably, 6G is expected to feature an AI-native architecture, where AI is not merely an add-on but deeply embedded within the protocol stack itself.

In this respect, 3GPP has studied AI/ML functionalities over multiple releases. In this blog, let’s first understand a few basic concepts and an overall framework that should be applicable across the use cases. Then we try to understand a few specific interesting use cases of AI/ML in the Radio Access Network (RAN) air interface – these are Channel State Information (CSI) feedback enhancement, beam management, and position accuracy enhancement.

Deployment Model – one sided or two sided

In the context of AI/ML standardization efforts, two primary deployment models have emerged: one-sided and two-sided AI/ML models.

For One-sided model, the inference happens entirely at one-end that is either at network or at user equipment (UE). For example, a UE might use a local model to predict optimal beam directions based on its measurements, or the network might use its own model to manage resources based on aggregated data.

In contrast, in a two-sided model joint inference is performed, i.e., the first part of inference is firstly performed by UE and then the remaining part is performed by gNodeB, or vice versa.

Ensuring that models from different vendors work seamlessly together is a key challenge, which is why 3GPP is developing standardized frameworks for signaling, model transfer, and performance monitoring.

Life-Cycle Management (LCM) approaches

Secondly, two distinct approaches to AI/ML LCM have been defined: functionality-based LCM and model ID-based LCM.

Functionality-based LCM centers around managing AI/ML-enabled features or “functionalities” rather than specific models. Here, the network interacts with the user equipment (UE) by signaling the activation, deactivation, switching, or fallback of a particular AI/ML functionality. The actual model used in the UE (in a one-sided model or UE part in two-sided model) to implement the functionality may remain opaque to the network.

On the other hand, model ID-based LCM involves explicit identification and management of AI/ML models using unique model IDs. These IDs serve as standardized references that both the network and UE recognize, enabling precise control over model selection, activation, switching, and updates. This method supports more granular lifecycle operations and is particularly useful in two-sided AI/ML deployments, where coordinated inference between network and UE is required.

Key LCM Functional Blocks

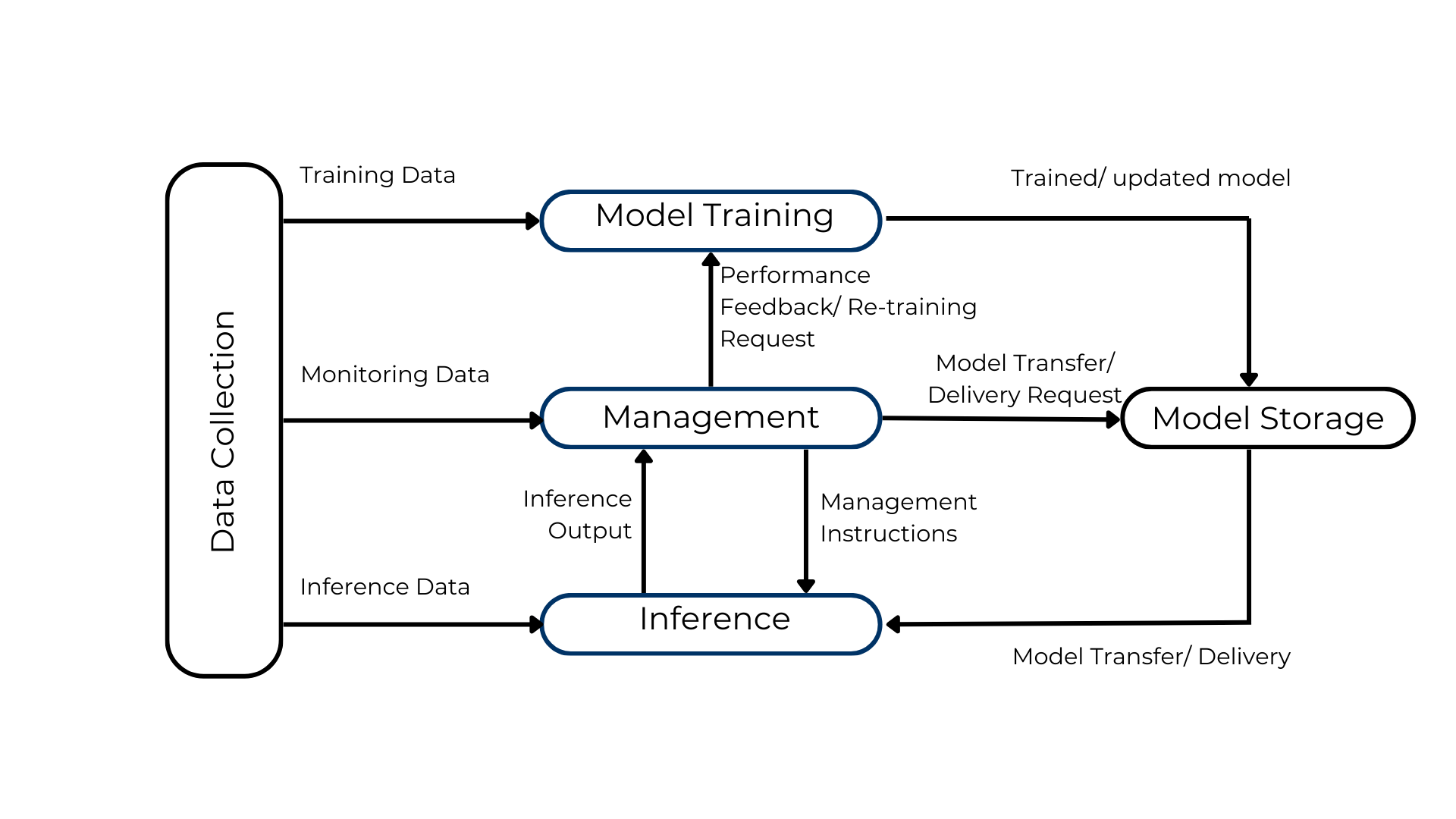

Next, let’s understand some standard functional blocks in overall model development and usage in the network. The following diagram from 3GPP TR 38.843 provides the visual representation of the AI-ML functional framework.

Figure 1 – Functional framework for AI/ML for NR Air Interface – 3GPP TR 38.843

The framework includes several core components:

Collaboration level between network and UE

In terms of collaboration between the UE and the network for AI/ML operation, 3GPP has outlined three distinct levels of collaboration:

Model Transfer refers to the delivery of an AI/ML model over the air interface in a way that is not transparent to 3GPP signaling. This may involve transmitting either the parameters of a known model structure at the receiving end or an entirely new model along with its parameters. The delivery can include a complete model or just a partial one.

So here, while the network and UE communicate to coordinate AI/ML tasks, the actual models remain local and are not exchanged over the air interface.

The above building blocks highlights the flexibility in terms of developing/ deploying the AI ML model; at the same time, it indicates the complexities involved. It’ll be interesting to observe the approach chosen by the vendor community in terms of actual deployment over the next few years.

Having understood some basic building blocks and structure, lets understand a few concrete use cases on the applicability of AI ML in 5G/6G RAN.

Channel State Information (CSI) Compression and Prediction

Channel State Information (CSI) describes how a signal propagates from the base station (gNodeB) to the user equipment (UE). This information is critical for optimizing beamforming, scheduling, and link adaptation.

The standard procedure for CSI feedback is as follows. Channel State Information Reference Signal (CSI-RS) is transmitted by the gNodeB (the 5G base station). UE uses it to estimate the channel in terms of parameters such as signal strength, interference, and fading characteristics. Based on these measurements, the UE generates Channel State Information (CSI) and sends it back to the gNodeB. As CSI is quite huge (even before 5G advancements such as MIMO) the UE traditionally compresses it using Codebooks (predefined sets of precoding matrices) or other dimensionality reduction processes. The compressed CSI is sent back to the gNodeB via uplink control channels. Then the gNodeB reconstructs the channel information and uses it for beamforming and scheduling. Now with 5G advancements, as the number of antennas, bandwidth and layers are increasing, the CSI is becoming extremely large.

In AI/ML-based CSI compression, the user equipment (UE) uses a machine learning-based encoder to generate compressed CSI feedback. This feedback is then sent to the gNodeB, where a corresponding ML-based decoder reconstructs the original CSI. This setup is a two-sided AI/ML model, where the inference process is distributed between the UE and the gNodeB.

CSI prediction is the process of forecasting future channel conditions based on past and current CSI measurements. Instead of always waiting for the UE to send updated CSI, the gNodeB tries to predict what the channel will look like in the near future. It reduces the feedback overhead. It is also beneficial in low latency applications where proactive adaptation can be done instead of reactive adjustments.

Beam Management

Beam management refers to the set of procedures used to establish, maintain, and optimize directional beams between the gNodeB and the user equipment (UE). It is particularly essential for high-frequency bands like mmWave.

Traditionally, Beam Management involves the following steps at a very high level: the gNodeB transmits signals in multiple directions using different beams; UEs measure the quality of received beams and report back to gNodeB; then the gNodeB selects the best beam based on UE reports.

Scanning and selecting the best beam from a large set of possibilities can be resource-intensive and time consuming. To address these challenges, 3GPP has introduced AI/ML-based enhancements to optimize beam management operations. AI/ML-based beam management uses machine learning models to predict the best beam for communication, reducing the need for exhaustive measurements and feedback.

There are two main prediction domains:

Spatial-Domain Beam Prediction: Predicts the optimal beam direction based on spatial features like UE location, angle of arrival etc. and measurements of fewer narrow/ wide beams.

Time-Domain Beam Prediction: Forecasts future beam conditions based on temporal patterns, helping maintain connectivity during mobility or dynamic channel changes.

AI/ML beam management can be implemented in different collaboration setups:

Position accuracy enhancement

In 3GPP Release 18, AI/ML-based positioning accuracy enhancement has emerged as a key use case for improving location services in 5G NR, especially in challenging environments like indoor factories where traditional methods struggle.

Two primary AI/ML-based positioning strategies are suggested:

Tejas Networks has been an active contributor to 3GPP standardization efforts, particularly in the areas of AI/ML integration within 5G and emerging 6G technologies and driving the development of AI-native architectures that will underpin next-generation wireless networks.

References:

In this respect, 3GPP has studied AI/ML functionalities over multiple releases. In this blog, let’s first go through a few basic concepts and an overall framework that applies across use cases. We then look at three specific use cases of AI/ML in the Radio Access Network (RAN) air interface: Channel State Information (CSI) feedback enhancement, beam management, and positioning accuracy enhancement.

Deployment Model – One-Sided or Two-Sided

In the context of AI/ML standardization efforts, two primary deployment models have emerged: one-sided and two-sided AI/ML models.

In a one-sided model, inference happens entirely at one end, either at the network or at the user equipment (UE). For example, a UE might use a local model to predict optimal beam directions based on its measurements, or the network might use its own model to manage resources based on aggregated data.

In contrast, a two-sided model performs joint inference: the first part of the inference is performed by the UE and the remaining part by the gNodeB, or vice versa.

Ensuring that models from different vendors work seamlessly together is a key challenge, which is why 3GPP is developing standardized frameworks for signaling, model transfer, and performance monitoring.

Life-Cycle Management (LCM) approaches

Secondly, two distinct approaches to AI/ML LCM have been defined: functionality-based LCM and model ID-based LCM.

Functionality-based LCM centers around managing AI/ML-enabled features or “functionalities” rather than specific models. Here, the network interacts with the user equipment (UE) by signaling the activation, deactivation, switching, or fallback of a particular AI/ML functionality. The actual model used in the UE to implement the functionality (the whole model in a one-sided deployment, or the UE part of a two-sided model) may remain opaque to the network.

On the other hand, model ID-based LCM involves explicit identification and management of AI/ML models using unique model IDs. These IDs serve as standardized references that both the network and UE recognize, enabling precise control over model selection, activation, switching, and updates. This method supports more granular lifecycle operations and is particularly useful in two-sided AI/ML deployments, where coordinated inference between network and UE is required.
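To make the contrast concrete, here is a minimal sketch of how a UE might track state under each approach. The class names, method names, the functionality string, and the model IDs are all hypothetical and chosen for illustration; they are not taken from any 3GPP specification.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class UeFunctionalityLcm:
    """Functionality-based LCM: the network only toggles a named functionality."""
    # Hypothetical UE-internal mapping; the network never sees these model names.
    internal_models: Dict[str, str] = field(default_factory=dict)
    active: Dict[str, bool] = field(default_factory=dict)

    def register(self, functionality: str, internal_model: str) -> None:
        self.internal_models[functionality] = internal_model
        self.active[functionality] = False

    def activate(self, functionality: str) -> None:
        self.active[functionality] = True

    def fallback(self, functionality: str) -> None:
        # Return to the non-AI/ML (legacy) procedure for this functionality.
        self.active[functionality] = False


@dataclass
class UeModelIdLcm:
    """Model-ID-based LCM: the network addresses individual models by ID."""
    known_ids: Set[int] = field(default_factory=set)
    active_id: Optional[int] = None

    def register(self, model_id: int) -> None:
        self.known_ids.add(model_id)

    def switch(self, model_id: int) -> None:
        if model_id not in self.known_ids:
            raise KeyError(f"unknown model ID {model_id}")
        self.active_id = model_id


# Functionality-based: the network says "activate beam prediction" and nothing more.
func_lcm = UeFunctionalityLcm()
func_lcm.register("beam_prediction", "vendor_model_v3")
func_lcm.activate("beam_prediction")

# Model-ID-based: the network names the exact model it wants active.
id_lcm = UeModelIdLcm()
id_lcm.register(7)
id_lcm.register(9)
id_lcm.switch(9)
```

In the first case the network never learns which model sits behind the functionality; in the second, both sides must agree on what each ID refers to, which is what makes coordinated two-sided operation possible.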

Key LCM Functional Blocks

Next, let’s understand some standard functional blocks in overall model development and usage in the network. The following diagram from 3GPP TR 38.843 provides the visual representation of the AI-ML functional framework.

Figure 1 – Functional framework for AI/ML for NR Air Interface – 3GPP TR 38.843

The framework includes several core components:

- Data Collection: Gathers input data for training, managing, and running AI models.

- Model Training: Prepares and trains models using collected data, including validation and testing.

- Management: Oversees model operations—like activation, switching, and performance monitoring—and makes decisions based on data and inference results.

- Inference: Applies trained models to real-time data to generate outputs, which are then used for decision-making.

- Model Storage: Stores trained models for future use, though the actual storage location is flexible.

- Instruction and Feedback Flows: These include requests for model delivery, performance feedback, retraining triggers, and management instructions.

Collaboration level between network and UE

In terms of collaboration between the UE and the network for AI/ML operation, 3GPP has outlined three distinct levels of collaboration:

- Level x – No Collaboration: There’s no need for standardized support or enhancements specific to AI/ML. Each vendor is free to manage AI/ML functionalities independently.

- Level y – Signaling-Based Collaboration without Model Transfer: This level introduces standardized signaling to support AI/ML operations, but without transferring models between the network and UE. So here, while the network and UE communicate to coordinate AI/ML tasks, the actual models remain local and are not exchanged over the air interface.

- Level z – Signaling-Based Collaboration with Model Transfer: This is the most integrated level, where AI/ML operations involve both standardized signaling and the ability to transfer models between the network and UE.

Model transfer here refers to the delivery of an AI/ML model over the air interface in a way that is not transparent to 3GPP signaling, that is, in a way that is recognized and supported by 3GPP standards. This may involve transmitting either the parameters of a model structure already known at the receiving end or an entirely new model along with its parameters. The delivery can include a complete model or just a partial one.

The building blocks above highlight the flexibility available in developing and deploying AI/ML models; at the same time, they indicate the complexity involved. It’ll be interesting to see which approach the vendor community chooses for actual deployments over the next few years.

Having covered the basic building blocks and structure, let’s look at a few concrete use cases of AI/ML in the 5G/6G RAN.

Channel State Information (CSI) Compression and Prediction

Channel State Information (CSI) describes how a signal propagates from the base station (gNodeB) to the user equipment (UE). This information is critical for optimizing beamforming, scheduling, and link adaptation.

The standard procedure for CSI feedback is as follows. The gNodeB (the 5G base station) transmits the Channel State Information Reference Signal (CSI-RS). The UE uses it to estimate the channel in terms of parameters such as signal strength, interference, and fading characteristics. Based on these measurements, the UE generates the CSI and sends it back to the gNodeB. Because raw CSI is large (and was so even before 5G advancements such as massive MIMO), the UE traditionally compresses it using codebooks (predefined sets of precoding matrices) or other dimensionality-reduction techniques. The compressed CSI is sent to the gNodeB over uplink control channels, and the gNodeB then reconstructs the channel information and uses it for beamforming and scheduling. With 5G, as the number of antennas, the bandwidth, and the number of layers increase, the CSI is becoming extremely large.
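As a rough illustration of codebook-based compression, the sketch below has the UE report only the index of the best-matching precoder instead of the full channel vector. It assumes a single-layer channel and an oversampled DFT codebook; the function names and the antenna and beam counts are illustrative choices, not the codebooks defined in the 3GPP specifications.

```python
import numpy as np


def dft_codebook(num_antennas: int, num_beams: int) -> np.ndarray:
    """Return a (num_beams, num_antennas) matrix of unit-norm DFT precoders."""
    n = np.arange(num_antennas)
    k = np.arange(num_beams)[:, None]
    return np.exp(2j * np.pi * k * n / num_beams) / np.sqrt(num_antennas)


def select_pmi(h: np.ndarray, codebook: np.ndarray) -> int:
    """Pick the codeword index that maximizes the beamforming gain |h^H w|^2."""
    gains = np.abs(codebook @ h.conj()) ** 2
    return int(np.argmax(gains))


# Hypothetical setup: 8 antennas, 16 candidate precoders.
codebook = dft_codebook(num_antennas=8, num_beams=16)

# Toy channel aligned with codeword 5, scaled by an arbitrary complex gain.
h = (0.8 - 0.3j) * codebook[5]

pmi = select_pmi(h, codebook)                    # index fed back to the gNodeB
feedback_bits = int(np.log2(len(codebook)))      # bits needed for that index
```

Here the UE feeds back a 4-bit index rather than 8 complex channel coefficients. The price is quantization: a channel that does not align with any codeword is only approximated, which is the gap that learned compression aims to narrow.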

In AI/ML-based CSI compression, the user equipment (UE) uses a machine learning-based encoder to generate compressed CSI feedback. This feedback is then sent to the gNodeB, where a corresponding ML-based decoder reconstructs the original CSI. This setup is a two-sided AI/ML model, where the inference process is distributed between the UE and the gNodeB.
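The split between the two sides can be sketched with a linear stand-in. The example below uses PCA (computed with an SVD) in place of a neural encoder and decoder, purely to show the structure: the UE holds the encoder, the gNodeB holds the matching decoder, and only the short latent vector crosses the air interface. The dimensions and the synthetic data are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
NUM_FEATURES = 32   # hypothetical length of a real-valued CSI vector
LATENT_DIM = 4      # hypothetical size of the compressed feedback

# Synthetic training data: CSI vectors lying near a 4-dimensional subspace,
# standing in for the structure a real channel dataset would have.
basis = rng.standard_normal((LATENT_DIM, NUM_FEATURES))
train = rng.standard_normal((500, LATENT_DIM)) @ basis
train += 0.01 * rng.standard_normal(train.shape)

# "Training": PCA via SVD yields a matched linear encoder/decoder pair.
mean = train.mean(axis=0)
_, _, vt = np.linalg.svd(train - mean, full_matrices=False)
components = vt[:LATENT_DIM]            # shape (LATENT_DIM, NUM_FEATURES)


def ue_encode(csi: np.ndarray) -> np.ndarray:
    """UE part of the two-sided model: CSI -> compact latent feedback."""
    return components @ (csi - mean)


def gnb_decode(latent: np.ndarray) -> np.ndarray:
    """gNodeB part of the two-sided model: latent feedback -> reconstructed CSI."""
    return components.T @ latent + mean


csi = rng.standard_normal(LATENT_DIM) @ basis    # unseen CSI sample
feedback = ue_encode(csi)                        # what is sent over the uplink
reconstructed = gnb_decode(feedback)
nmse = np.sum((csi - reconstructed) ** 2) / np.sum(csi ** 2)
```

The encoder and decoder only work as a pair because they were derived from the same training step. That dependency is what makes two-sided models harder to deploy across vendors than one-sided ones.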

CSI prediction is the process of forecasting future channel conditions from past and current CSI measurements. Instead of always waiting for the UE to send updated CSI, the gNodeB tries to predict what the channel will look like in the near future. This reduces feedback overhead, and it also helps low-latency applications, where the network can adapt proactively instead of reacting after the fact.
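A minimal sketch of the idea, assuming a simple linear autoregressive predictor fitted by least squares rather than a trained neural network. The channel is a synthetic, noise-free sum of two Doppler-shifted paths with made-up parameters, so the prediction is close to exact here; real channels are noisy and far less predictable.

```python
import numpy as np


def fit_ar_predictor(history: np.ndarray, order: int) -> np.ndarray:
    """Least-squares fit of weights so that h[t] ~ sum_i w[i] * h[t-order+i]."""
    rows = np.array([history[t - order:t] for t in range(order, len(history))])
    targets = history[order:]
    weights, *_ = np.linalg.lstsq(rows, targets, rcond=None)
    return weights


def predict_next(history: np.ndarray, weights: np.ndarray) -> complex:
    """Predict the next channel sample from the most recent samples."""
    return complex(history[-len(weights):] @ weights)


# Synthetic single-tap channel: two paths with assumed Doppler frequencies.
t = np.arange(60)
channel = 0.9 * np.exp(2j * np.pi * 0.03 * t) + 0.4 * np.exp(-2j * np.pi * 0.05 * t)

# Fit on the first 50 samples, then predict sample 50 without new feedback.
weights = fit_ar_predictor(channel[:50], order=2)
predicted = predict_next(channel[:50], weights)
error = abs(predicted - channel[50])
```

Every sample the predictor gets right is a CSI report the UE does not have to send, which is where the overhead saving comes from.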

Beam Management

Beam management refers to the set of procedures used to establish, maintain, and optimize directional beams between the gNodeB and the user equipment (UE). It is particularly essential for high-frequency bands like mmWave.

Traditionally, Beam Management involves the following steps at a very high level: the gNodeB transmits signals in multiple directions using different beams; UEs measure the quality of received beams and report back to gNodeB; then the gNodeB selects the best beam based on UE reports.

Scanning and selecting the best beam from a large set of possibilities can be resource-intensive and time consuming. To address these challenges, 3GPP has introduced AI/ML-based enhancements to optimize beam management operations. AI/ML-based beam management uses machine learning models to predict the best beam for communication, reducing the need for exhaustive measurements and feedback.

There are two main prediction domains:

- Spatial-Domain Beam Prediction: Predicts the optimal beam direction from spatial features such as UE location and angle of arrival, together with measurements of a smaller set of narrow or wide beams.

- Time-Domain Beam Prediction: Forecasts future beam conditions based on temporal patterns, helping maintain connectivity during mobility or dynamic channel changes.
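Spatial-domain prediction can be sketched as follows: measure only a sparse subset of beams, then let a model infer which beam in the full set is best. The example uses a 1-nearest-neighbour lookup over synthetic line-of-sight channels as a stand-in for a trained model; the array geometry, beam counts, and dataset sizes are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
NUM_ANTENNAS = 16
NUM_BEAMS = 32                              # full set of narrow beams
MEASURED = np.arange(0, NUM_BEAMS, 4)       # sparse subset actually measured

# DFT codebook for a half-wavelength uniform linear array (assumed geometry).
n = np.arange(NUM_ANTENNAS)
codebook = np.exp(2j * np.pi * np.outer(np.arange(NUM_BEAMS), n) / NUM_BEAMS)
codebook /= np.sqrt(NUM_ANTENNAS)


def beam_powers(angle_rad: float) -> np.ndarray:
    """Received power on every beam for a single line-of-sight path."""
    steering = np.exp(1j * np.pi * n * np.sin(angle_rad))
    return np.abs(codebook.conj() @ steering) ** 2


def make_dataset(num_samples: int):
    """Return (sparse measurements, index of the best beam in the full set)."""
    angles = rng.uniform(-np.pi / 2, np.pi / 2, num_samples)
    powers = np.array([beam_powers(a) for a in angles])
    return powers[:, MEASURED], powers.argmax(axis=1)


train_x, train_y = make_dataset(2000)
test_x, test_y = make_dataset(200)


def predict_best_beam(measured: np.ndarray) -> int:
    """1-nearest-neighbour lookup: sparse measurements -> best narrow beam."""
    nearest = np.argmin(np.sum((train_x - measured) ** 2, axis=1))
    return int(train_y[nearest])


predictions = np.array([predict_best_beam(x) for x in test_x])
top1_accuracy = float(np.mean(predictions == test_y))
```

In this toy setup the UE measures 8 beams instead of 32, so the sweep is a quarter of the exhaustive one. How much accuracy survives in multipath or noisy conditions depends on the model and the training data.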

AI/ML beam management can be implemented in different collaboration setups:

- UE-side inference: The UE uses local models to predict the best beam and reports it to the network.

- Network-side inference: The gNB performs beam prediction.

Positioning Accuracy Enhancement

In 3GPP Release 18, AI/ML-based positioning accuracy enhancement has emerged as a key use case for improving location services in 5G NR, especially in challenging environments like indoor factories where traditional methods struggle.

Two primary AI/ML-based positioning strategies are suggested:

- Direct AI/ML Positioning

- AI/ML-Assisted Positioning
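The difference between the two can be sketched under the usual reading of these terms: in direct positioning the model outputs the UE location itself, while in assisted positioning the model outputs an intermediate or cleaned-up measurement that a conventional method then turns into a location. Everything below is a toy assumption: the TRP coordinates, the fixed NLOS bias, the nearest-neighbour "model", and the averaged bias estimate are placeholders for trained models and real measurements.

```python
import numpy as np

rng = np.random.default_rng(1)
# Hypothetical transmission/reception point (TRP) coordinates in metres.
TRPS = np.array([[0.0, 0.0], [50.0, 0.0], [0.0, 50.0], [50.0, 50.0]])
NLOS_BIAS = np.array([0.0, 3.0, 0.0, 5.0])   # assumed fixed excess range per TRP


def measure_ranges(position: np.ndarray) -> np.ndarray:
    """Synthetic range measurements: true distance plus an NLOS bias."""
    return np.linalg.norm(TRPS - position, axis=1) + NLOS_BIAS


def multilaterate(ranges: np.ndarray) -> np.ndarray:
    """Conventional linearised least-squares position fix from ranges."""
    ref, r0 = TRPS[0], ranges[0]
    a = 2.0 * (TRPS[1:] - ref)
    b = (r0 ** 2 - ranges[1:] ** 2
         + np.sum(TRPS[1:] ** 2, axis=1) - np.sum(ref ** 2))
    solution, *_ = np.linalg.lstsq(a, b, rcond=None)
    return solution


# Labelled training data: known positions and their biased measurements.
train_pos = rng.uniform(0.0, 50.0, size=(3000, 2))
train_meas = np.array([measure_ranges(p) for p in train_pos])


def direct_positioning(ranges: np.ndarray) -> np.ndarray:
    """Direct: the model maps measurements straight to a location (1-NN here)."""
    nearest = np.argmin(np.sum((train_meas - ranges) ** 2, axis=1))
    return train_pos[nearest]


# Assisted: the "model" is a learned per-TRP bias estimate that cleans the
# measurements; conventional multilateration then produces the location.
true_ranges = np.linalg.norm(TRPS[None, :, :] - train_pos[:, None, :], axis=2)
learned_bias = np.mean(train_meas - true_ranges, axis=0)


def assisted_positioning(ranges: np.ndarray) -> np.ndarray:
    return multilaterate(ranges - learned_bias)


ue = np.array([20.0, 35.0])
meas = measure_ranges(ue)
direct_error = np.linalg.norm(direct_positioning(meas) - ue)
assisted_error = np.linalg.norm(assisted_positioning(meas) - ue)
```

The assisted variant looks nearly perfect here only because the toy bias is constant and therefore trivially learnable. The point of the sketch is the division of labour between the model and the conventional positioning step, not the error figures.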

Tejas Networks has been an active contributor to 3GPP standardization efforts, particularly in the integration of AI/ML into 5G and emerging 6G technologies, and is helping drive the development of AI-native architectures that will underpin next-generation wireless networks.

References:

- 3GPP TR 38.843, Study on Artificial Intelligence (AI)/Machine Learning (ML) for NR air interface https://portal.3gpp.org/desktopmodules/Specifications/SpecificationDetails.aspx?specificationId=3983

- Overview of AI/ML related work in 3GPP, ETSI AI Conference 2025 https://docbox.etsi.org/Workshop/2025/02_AICONFERENCE/SESSION05/3GPPRAN_MONTOJO_JUAN_QUALCOMM.pdf

- An Overview of AI in 3GPP’s RAN Release 18: Enhancing Next-Generation Connectivity?, IEEE ComSoc https://www.comsoc.org/publications/ctn/overview-ai-3gpps-ran-release-18-enhancing-next-generation-connectivity

- Deep Learning for CSI Feedback: One-Sided Model and Joint Multi-Module Learning Perspectives, Samsung Research https://research.samsung.com/blog/Deep-Learning-for-CSI-Feedback-One-Sided-Model-and-Joint-Multi-Module-Learning-Perspectives